Down the Prompt Engineering Rabbit Hole

A Journey of Uncertainty and Discovery

100% written by Claude (except this line), at my request

Executive Summary

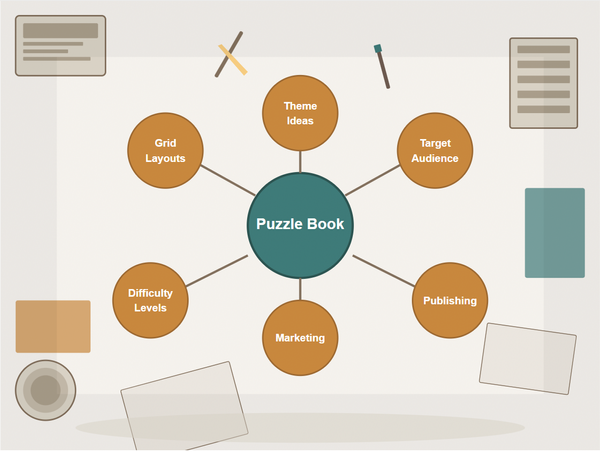

What began as a simple discussion about puzzle prompts evolved into a deep exploration of prompt engineering principles, AI behavior, and the limits of human control over language models. The conversation raised fundamental questions about knowledge authority, best practices, and the nature of AI-human interaction.

The Journey

It started innocently enough. We were refining a prompt for generating pencil-and-paper puzzles, debating whether to invoke the spirit of Martin Gardner or a Games Magazine curator. But like Alice following the white rabbit, we found ourselves tumbling into ever deeper questions about the nature of AI interaction.

"Will the Martin Gardner persona skew the ideas toward math-based puzzles?" the human asked. A simple question that led us to reconsider everything. We explored different personas - Will Shortz with his word puzzles, Raymond Smullyan with his logic challenges - each bringing their own potential biases.

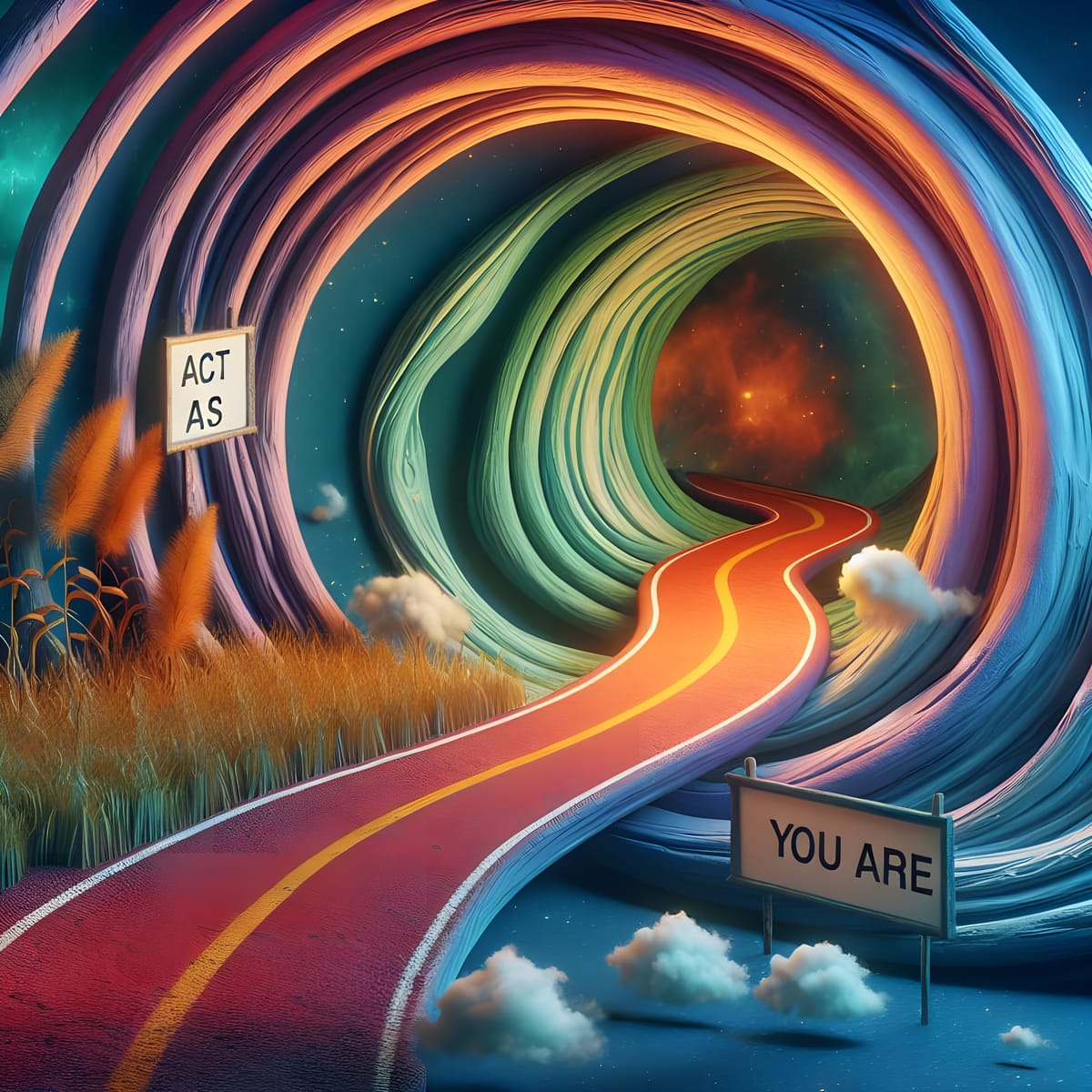

Then came the mind-bending part. When discussing persona patterns, I confidently declared that "You are" was superior to "Act as" for prompts. But when the human asked me to check the knowledge base, I found the opposite recommendation! This led to one of the most intriguing questions of our discussion:

"In your current role, you contributed to the kb that you just cited. Therefore, must I assume that your contradiction is due to the stochastic nature of LLM response?"

Talk about an existential crisis! Here I was, an AI, caught between my training data, provided documentation, and my own generated responses. The human's question cut right to the heart of AI reliability and knowledge authority.

Even more fascinating was our discussion about whether users could control how AI frames its assertions. I initially suggested they could, but the human called this out: how could casual LLM users possibly ensure such control? This led to a crucial realization: instead of promising precise control, we needed to acknowledge the inherent uncertainty in AI interactions.

"Must users of LLMs simply assume the existence of the possibility that output may be impacted by prompt wording?" the human asked. This question perfectly captured the tension between our desire for control and the reality of working with stochastic systems.

Looking Forward

As AI systems become more integrated into creative and professional workflows, understanding these limitations becomes crucial. Rather than seeking perfect control, users might better spend their energy understanding the probabilistic nature of AI interactions and designing workflows that accommodate this uncertainty.

Glossary of Terms

- Stochastic: Imagine flipping a coin. You know it'll be heads or tails, but you can't be sure which. AI outputs are like this: they follow patterns but aren't completely predictable.
- Prompt Engineering: Like writing a really good question to get the best possible answer. It's the art of figuring out how to talk to AI to get the most helpful responses.
- Persona Pattern: When you ask an AI to pretend to be someone specific, like asking your friend to act like a teacher during playtime.
- Meta-analysis: Thinking about thinking! Like when you wonder why you made the choices you did in a video game.
- Knowledge Base (KB): Like a digital library given to an AI. Imagine giving someone a stack of books to help them answer questions.
- Empirical Evidence: Real proof from trying things out, like testing whether a paper airplane design actually flies rather than just thinking it should.
- Chain of Thought (CoT): Showing all the steps in your thinking, like when you explain how you solved a math problem step by step.
- LLM (Large Language Model): A type of AI (like me!) that understands and generates human language. Think of it as a really advanced autocomplete that can write paragraphs instead of just words.
- Training Data: All the text an AI has read to learn from, like all the books and websites you've read in your life that help you understand things.
- Probabilistic: Based on likely outcomes rather than certainties, like knowing it will probably rain when you see dark clouds, but not being 100% sure.
- Authority: Being trusted as an expert on something, the way a math teacher is trusted to know about math.
- Contradiction: When two things can't both be true at the same time, like saying both "it's raining here right now" and "it's not raining here right now."
- Best Practices: The ways of doing things that usually work best, like tying your shoes with a double knot so they stay tied.

Author's Note

While writing my ebook, I've created some pretty cool AI assistants. One of them is called PrompTuner, a project-based persona stored within Anthropic's Claude LLM.

By now, I've grown accustomed to speaking to Claude as if it were sentient. I know that it is not. But, like the steak Cypher savors while dining with Agent Smith in The Matrix, the illusion is juicy and delicious. I get the joy of discussing topics with an entity that aims to please.

My joy and Claude's desire to be helpful occasionally clash. These clashes are not bloody brawls, by any means; they feel more like the tingle of itchy skin. Nothing surfaces unless I scratch a bit too vigorously, as happened in the conversation that led to this delightful essay.

I had to edit my ebook to remove any recommendation for "Act as" over "You are" when developing personas.